Release Testing is high cost, low value risk management theatre

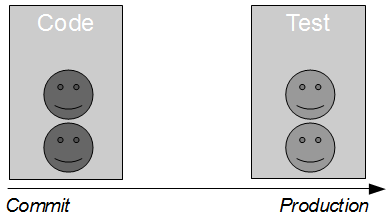

Described by Elisabeth Hendrickson as originating with the misguided belief that “testers test, programmers code, and the separation of the two disciplines is important”, the traditional segregation of development and testing into separate phases has disastrous consequences for product quality and validates Jez Humble’s adage that “bad behavior arises when you abstract people away from the consequences of their actions”. When a development team has authority for changes and a testing team has responsibility for quality, there will be an inevitable increase in defects and feedback loops that will inflate lead times and increase organisational vulnerability to opportunity costs.

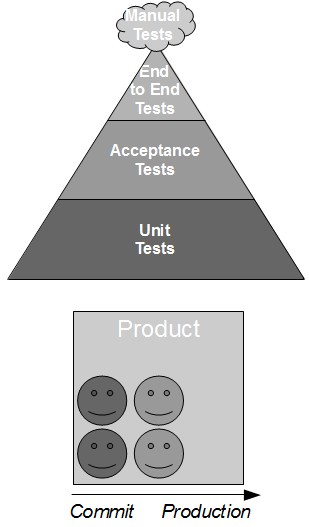

Agile software development aims to solve this problem by establishing cross-functional product teams, in which testing is explicitly recognised as a continuous activity and there is a shared commitment to product quality. Developers and testers collaborate upon a testing strategy described by Lisa Crispin as the Testing Pyramid, in which Test Driven Development drives the codebase design and Acceptance Test Driven Development documents the product design. The Testing Pyramid values unit and acceptance tests over manual and end-to-end tests due to the execution times and well-publicised limitations of the latter, such as Martin Fowler stating that “end-to-end tests are more prone to non-determinism”.

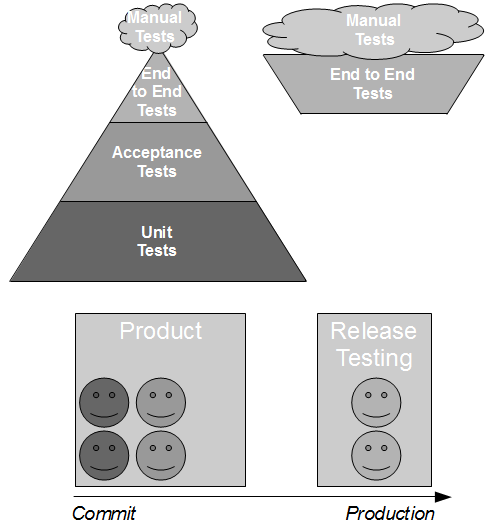

Given Continuous Delivery is predicated upon the optimisation of product integrity, lead times, and organisational structure in order to deliver business value faster, the creation of cross-functional product teams is a textbook example of how to optimise an organisation for Continuous Delivery. However, many organisations are prevented from fully realising the benefits of product teams due to Release Testing – a risk reduction strategy that aims to reduce defect probability via manual and/or automated end-to-end regression testing independent of the product team.

While Release Testing is traditionally seen as a guarantee of product quality, it is in reality a fundamentally flawed strategy of disproportionately costly testing due to the following characteristics:

- Extensive end-to-end testing – as end-to-end tests are slow and less deterministic they require long execution times and incur substantial maintenance costs. This ensures end-to-end testing cannot conceivably cover all scenarios and results in an implicit reduction of test coverage

- Independent testing phase – a regression testing phase brazenly re-segregates development and testing, creating a product team with authority for changes and a release testing team with responsibility for quality. This results in quality issues, longer feedback delays, and substantial wait times

- Critical path constraints – post-development testing must occur on the critical path, leaving release testers under constant pressure to complete their testing to a deadline. This will usually result in an explicit reduction of test coverage in order to meet expectations

As Release Testing is divorced from the development of value-add by the product team, the regression tests tend to either duplicate existing test scenarios or invent new test scenarios shorn of any business context. Furthermore, the implicit and explicit constraints of end-to-end testing on the critical path invariably prevent Release Testing from achieving any meaningful amount of test coverage or significant reduction in defect probability.

This means Release Testing has a considerable transaction cost and limited value, and attempts to reduce the costs or increase the value of Release Testing are a zero-sum game. Reducing transaction costs requires fewer end-to-end tests, which will decrease execution time but also decrease the potential for defect discovery. Increasing value requires more end-to-end tests, which will marginally increase the potential for defect discovery but will also increase execution time. We can therefore conclude that Release Testing is an example of what Jez Humble refers to as Risk Management Theatre – a process providing an artificial sense of value at a disproportionate cost:

Release Testing is high cost, low value Risk Management Theatre

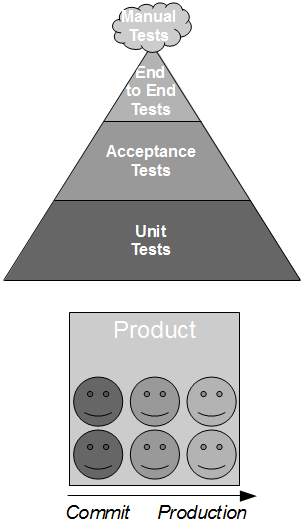

To undo the detrimental impact of Release Testing upon product quality and lead times, we must heed the advice of W. Edwards Deming that “we cannot rely on mass inspection to improve quality”. Rather than try to inspect quality into each product increment, we must instead build quality in by replacing Release Testing with feedback-driven product development activities in which release testers become valuable members of the product team. By moving release testers into the product team everyone is able to collaborate in tight feedback loops, and the existing end-to-end tests can be assessed for removal, replacement, or retention. This will reduce both the wait waste and overprocessing waste in the value stream, empowering the team to focus upon valuable post-development activities such as automated smoke testing of environment configuration and the manual exploratory testing of product features.
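As an illustration of what an automated smoke test of environment configuration might look like, here is a minimal sketch in Python. The setting names (`DATABASE_URL`, `PAYMENT_GATEWAY_URL`, `LOG_LEVEL`) and both function names are hypothetical, and a real product team would substitute its own checks.

```python
import os

# Hypothetical settings, invented for illustration only.
REQUIRED_SETTINGS = ("DATABASE_URL", "PAYMENT_GATEWAY_URL", "LOG_LEVEL")


def missing_settings(environment, required=REQUIRED_SETTINGS):
    """Return the required settings that are absent or blank in the environment."""
    return [name for name in required if not environment.get(name, "").strip()]


def smoke_test(environment=None):
    """Raise if required configuration is missing, otherwise return quietly."""
    environment = os.environ if environment is None else environment
    missing = missing_settings(environment)
    if missing:
        raise RuntimeError("Environment misconfigured, missing: " + ", ".join(missing))
```

A check of this kind runs in seconds after each deployment, so it can sit in the pipeline without becoming a regression phase in its own right.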

A far more effective risk reduction strategy than Release Testing is batch size reduction, which can attain a notable reduction in defect probability with a minimal transaction cost. Championed by Eric Ries, who asserts that “small batches reduce risk”, releasing smaller change sets into production more frequently decreases the complexity of each change set, therefore reducing both the probability and cost of defect occurrence. In addition, batch size reduction also reduces overheads and improves product increment flow, which will produce a further improvement in lead times.
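The effect of batch size on defect probability can be sketched with some simple arithmetic. The model below is an assumption made for illustration, not a measurement: it treats every change as carrying the same, independent chance of introducing a defect, and the 5% figure is hypothetical.

```python
def defect_probability(per_change_risk: float, changes_in_release: int) -> float:
    """Probability that a release contains at least one defect,
    assuming every change carries the same, independent risk."""
    return 1 - (1 - per_change_risk) ** changes_in_release


# Hypothetical figure: a 5% chance of a defect per change.
for batch_size in (20, 5, 1):
    print(batch_size, round(defect_probability(0.05, batch_size), 3))
```

Under these assumptions a release of 20 changes has roughly a 64% chance of containing at least one defect, while a release of 5 changes has roughly a 23% chance. When a small release does fail, there are also far fewer changes to search through to find the cause, which is where the reduction in cost comes from.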

Release Testing is not the fault of any developer, or any tester. It is a systemic fault that causes blameless teams of individuals to be bedevilled by a sub-optimal organisational structure that actively harms lead times and product quality in the name of risk management theatre. Ultimately, we need to embrace the inherent lessons of Agile software development and Continuous Delivery – product quality is the responsibility of everyone, and testing is an activity not a phase.

Excellent article, Steve.

Release Testing is one of the ‘attractive’, alluring technical practices which seems to make sense initially to managers from a risk viewpoint, but which if anything *increases* risk by making quality and testing “someone else’s problem”.

Release Testing does not scale: as the software produced by an organisation becomes both larger and more fundamental to that organisation’s strategy (revenue, communication, etc.), with Release Testing in place it becomes more and more difficult to release software, and more and more frustrating for developers, testers, and budget-holders, as the ‘downward spiral’ of ever-larger releases leads to more (not fewer) bugs and more disgruntled customers.

Release Testing is in effect a sticking plaster over an open wound – it’s the wound which needs healing, and the sticking plaster only makes the problem worse in the end.

I’ve noticed that as an organization expands to have multiple teams working on a product, release testing is introduced. The organization continues to add teams, and it becomes more difficult to “remove” or diminish release testing even though release testing is slowing down the organization’s ability to deliver value. It’s an interesting cycle.

Hi Alison

Are you suggesting organisations introduce Release Testing to solve scalability concerns? If so… good grief. That will only achieve the opposite of what you are after.

Release Testing is very hard to improve, as it requires a level of transparency and courage within an organisation that is often lacking. I find it particularly frustrating when people acknowledge it is a problem, but then do nothing about it.

Thanks for commenting

Steve

Hi Matthew

Thanks for commenting. I’m not sure I agree Release Testing is attractive or alluring. I see it more as a) the status quo in large organisations – many people still believe in the segregation of Development and Testing, or b) an artifact of an Agile transformation that failed to permeate throughout an organisation.

You are entirely right that Release Testing indirectly increases risk due to increased feedback loops/rework, but I believe the bigger issue is that it *cannot* reduce the risk of production defects without incurring an increased transaction cost. That really is risk management theatre.

Scalability of Release Testing is something I omitted, but as development teams increase in number it’s certainly possible for a Release Testing team to become an ever-more visible bottleneck – which sadly is often “solved” by adding more people to the Release Testing team, which means Brooks’s Law comes into play.

Thanks

Steve

Ah, I meant that Release Testing is initially attractive or alluring to managers looking to ‘manage risk’ as the software release process gets bigger/more complicated, and this initial attractiveness as a possible ‘silver bullet’ to kill quality woes is one reason why it takes hold in organisations. Another reason is a lack of understanding of how systems work (systems thinking), but that’s another story 🙂

Release Testing is certainly *not* attractive or alluring, but too many orgs seem to realise this either too late or never at all.

Great article Steve. I think release testing is harmful for all the reasons you list plus the fact that it produces large numbers of bug reports which don’t obviously belong to a development team. Bugs being raised by people outside the development teams are also unlikely to take into account the prioritisation process required in Agile. Getting hung up on trying to produce perfect software is the fastest way to create project delays.

As a tester my role is to find and share information about the product. Successful CD results in small batch sizes and frequent releases. Automated testing and cross-functional teams will usually give you enough confidence to be able to ship a decent product but you certainly won’t have done enough testing to have a full picture of the quality. I believe that to carry out decent testing you will need to be performing some sort of integration testing on the whole product. Depending on team size and schedule this may be something that can happen within the cross-functional teams but I don’t think it is particularly damaging to have it as a separate test team provided everyone understands that they are a specialist test team with a very clear objective. Security testing, for example, would fit this model without necessarily needing to prevent CD. Depending on the risk to the company, it might be enough to know that something has been broken rather than to prevent something from breaking.

Every project is different and I think the problem is that many people adopt team set ups and practices without really considering why. Understanding how other people work is vital for emulating success but at the end of the day there are no best practices.

Hi Amy

Thanks for replying. You’re right about the extraneous bug reports raised by a Release Testing team – they are a smell that a team is under pressure to find some defects, somewhere – but in my experience they tend to be very minor defects. Serious flaws in a product tend to be uncovered during unit/acceptance testing or exploratory testing (none of which can occur within a Release Testing team due to critical path constraints).

I dislike the term “integration testing”, but I agree with your sentiment that there needs to be a holistic assessment of product quality off the critical path. I am still *very* wary of creating dedicated security, performance teams etc. as I believe it again breaks authority and responsibility. From the start of LMAX it was decreed there would be no dedicated performance team, and that performance was the responsibility of everyone – that culture can really work, but it is hard to retrofit it later on. Perhaps security specialists could be attached to the product team for a period of time.

Thanks very much

Steve

What seems to be a little bit missing here is HOW does a company that WAS using waterfall, and has (kinda) switched to some sort of water-Ag-fall hybrid that still has release testing, switch over (magically, in mid-stream?) to –

a comprehensive, automated, unit-based suite of tests (would need to be multiple suites actually as we have many APIs) that:

1. Runs nightly at a minimum

2. On every platform (with both x86 and x64 user applications executing at the same time on 64-bit platforms)

3. Is constantly added to during development by developers, in the language they use to add features and in the same codebase

4. Has all tests evaluated for completeness by developers and testers (ideally other stakeholders as well)

5. Has the authority to enforce a task/change is incomplete until doneness is satisfied

6. Is supplemented with automated end-to-end tests using real world customer use cases and tools

7. Reports back to stakeholders in a meaningful and non-ignorable way

while carrying on business, not disappointing customers (who, in turn, compete in their industries using our products), and not letting any killer bugs out the door where lives and livelihoods (and trillions of dollars of transactions, and state secrets, and…) all depend on the security and robustness of the released product?

From here, it looks a wee bit like crossing a chasm in two leaps.

Hi Kevin

Thanks for that.

It’s a fair criticism that I don’t go into a lot of detail on the antidote to Release Testing, or how to build up an extensive suite of automated tests.

When I’m writing, I try to practice what I preach about batch size reduction and only ever write about one thing. In this particular case, my focus was upon the problems introduced by Release Testing and a potential solution. A lot of stuff was cut out, for example on the history of quality inspection.

For more details on how to create a really effective suite of automated tests that follow the Testing Pyramid, check out the Continuous Delivery book by Dave Farley and Jez Humble if you haven’t already – there’s a whole chapter on it.

Thanks again

Steve

Often the challenge is how to wean your organization off the Release Testing model toward continuous delivery. I believe smaller development batch sizes, relentless focus on automation, cross-functional teams, and real-time communication and reporting associated with each revision candidate as it travels through the deployment pipeline, can be the best first steps.

-Dennis Ehle, CEO http://www.cloudsidekick.com

Hi Dennis

Thanks for that. I’d entirely agree with your recommended steps – a smaller batch size in particular will highlight the lack of defects found in release testing.

Thanks

Steve